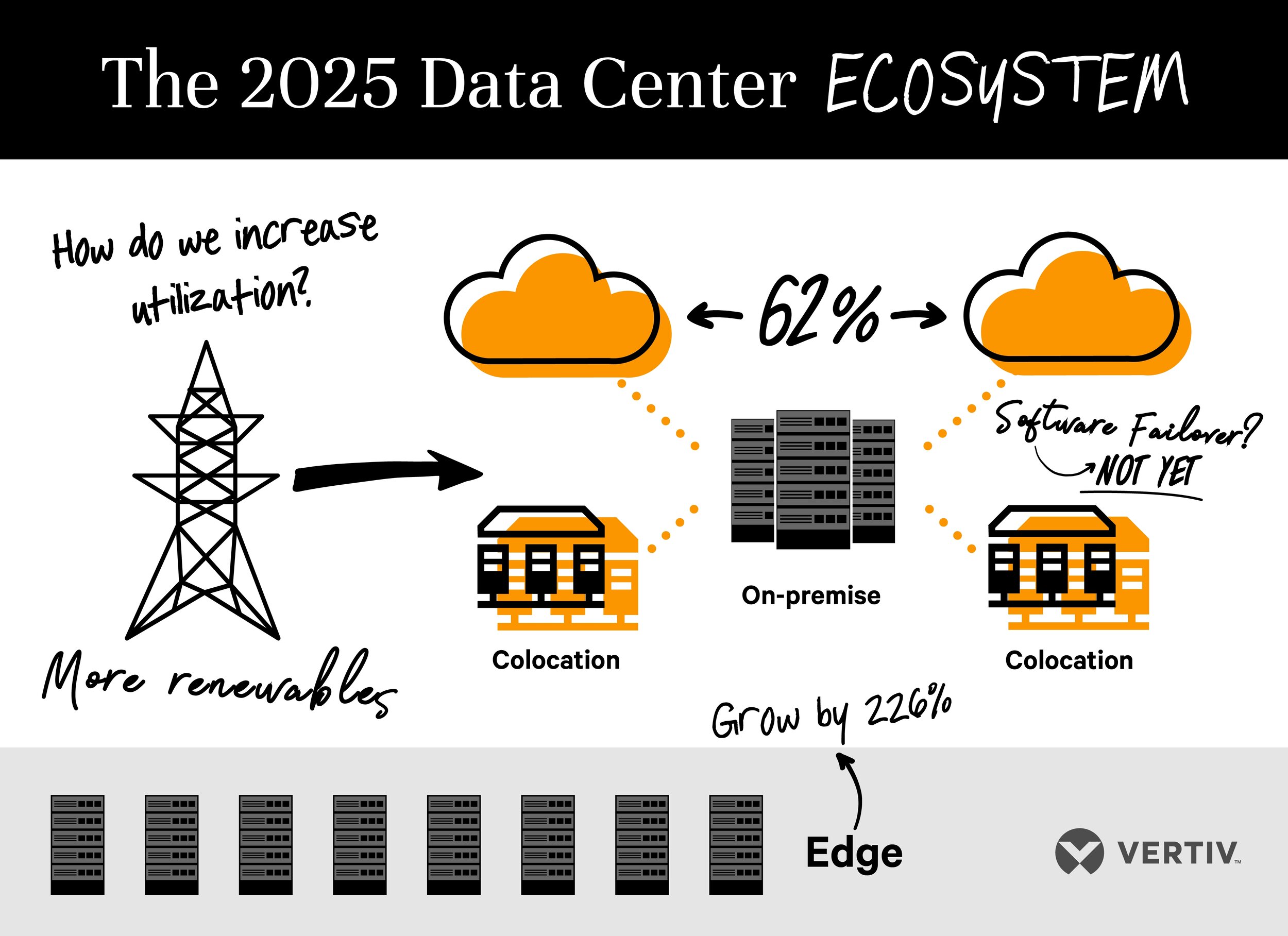

Enterprise IT strategists are adapting to new demands from the industrial edge, 5G networks, and hybrid deployment models that will lead to more diverse data centers across more business settings.

That’s the message from a broad new survey of 150 senior IT executives and data center managers on the future of the data center. IT leaders and engineers say they must transform their data centers to leverage the explosive growth of data coming from nearly every direction.

Yet, according to the Forbes-conducted survey, only a small percentage of businesses are ready for the decentralized and often small data centers that are needed to process and analyze data close to its source.

The next BriefingsDirect discussion on the latest data center strategies unpacks how more self-healing and automation will be increasingly required to manage such dispersed IT infrastructure and support increasingly hybrid deployment scenarios.

Listen to the podcast. Find it on iTunes. Read a full transcript or download a copy.

Joining us to help learn more about how modern data centers will efficiently extend to the computing edge is Martin Olsen, Vice President of Global Edge and Integrated Solutions at Vertiv™. The interview is conducted by Dana Gardner, Principal Analyst at Interarbor Solutions.

Here are some excerpts:

Gardner: Martin, what’s driving this movement away from mostly centralized IT infrastructure to a much more diverse topology and architecture?

Olsen: It’s an interesting question. The way I look at it is it’s about the cloud coming to you. It certainly seems that we are moving away from centralized IT or centralized locations where we process data. It’s now more about the cloud moving beyond that model.

We are on the front steps of a profound re-architecting of the Internet. Interestingly, there’s no finish line or prescribed recipe at this point. But we need to look at processing data very, very differently.

Over the past decade or more, IT has become an integral part of our businesses. And it’s more than just back-end applications like customer relationship management (CRM), enterprise resource planning (ERP), and material requirements planning (MRP) systems that service the organization. It’s also become an integrated fabric to how we conduct our businesses.

Meeting at the edge

Gardner: Martin, Cisco predicts there will be 28.5 billion connected devices by 2022, and KPMG says 5G networks will carry 10,000 times more traffic than current 4G networks. We’re looking at an “unknown unknown” here when it comes to what to expect from the edge.

Olsen: Yes, that’s right, and the starting point is well beyond just content distribution networks (CDNs). It’s also about home automation, such as accessing your home security cameras and adjusting the temperature.

That’s now moving to business automation, where we use compute and generate data to develop, design, manufacture, deploy, and operate our offerings to customers in a much better and differentiated fashion.

We’re also trying to improve the customer experience and how we interact with consumers. So billions of devices are generating an unimaginable amount of data out there, and that has driven what has become known as edge computing, which means more computing done at or near the source of data.

In the past, we pushed that data out for consuming, but now it’s much more about data meets people, it’s data interacting with people in a distributed IT environment. And then, going beyond that is 5G.

We see a paradigm shift in the way we use IT. Take, for example, the amount of tech that goes into a manufacturing facility, especially high-tech manufacturing. It’s exploding, with tens of thousands of sensors deployed in just one facility to help dramatically improve productivity, differentiate, and drive efficiency into the business.

Retail operations, from a compute standpoint, now require location services to offer a personalized experience in both the pre-shop phase as well as when you go into the store, and potentially in the post-shop, or follow-up experience.

We need to deliver these services quickly, and that requires lower latency and higher levels of bandwidth. It’s increasingly about pushing out from a central standpoint to a distributed fashion. We need to be rethinking how we deploy data centers. We need to think about the future and where these data centers are going to go. Where are we going to be processing all of this data?

Where does the data go?

Gardner: The complexity over the past 10 years about factoring cloud, hybrid cloud, private cloud, and multi-cloud is now expanding back down into the organization — whether it’s an environment for retail, home and consumer, and undoubtedly industrial and business-to-business. How are IT leaders and engineers going to update their data centers to exploit 5G and edge computing opportunities despite this complexity?

Olsen: You have to think about it differently around your physical infrastructure. You have the data aspect of where data moves and how you process it. That’s going to sit on physical infrastructure somewhere, and it’s going to need to be managed somehow.

Learn How Self-Healing and Automation Help Manage Dispersed IT Infrastructure

You should, therefore, think differently about redesigning and deploying the physical infrastructure. How do you operate and manage it? The concept of a data center has to transform and evolve. It’s no longer just a big building. It could be 100, 1,000, or 10,000 smaller micro data centers. These small data centers are going to be located in places where we had previously never imagined you would put IT infrastructure.

And so, the reliance on onsite technical and operational expertise has to evolve, too. You won’t necessarily have that technical support, a data center engineer walking the halls of a massive data center all day, for example. You are going to be in places like some backroom of a retail store, a manufacturing facility, or the base of a cell tower. It could be highly inaccessible.

You’ll need solutions that offer predictive operations, that have self-healing capabilities within them where they can fail in place but still operate as a function of built-in redundancy. You want to deploy solutions that have zero-touch provisioning, so you don’t have to go to every site to set it up and configure it. It needs to be done remotely and with automation built-in.

You should also consider where the applications are going to be hosted, and that’s not clear now. How much bandwidth is needed? It’s not clear. The demand is not clear at this point. As I said in the beginning, there is no finish line. There’s nothing that we can draw up and say, “This is what it’s going to be.” There is a version of it out there that’s currently focused around home automation and content distribution, and that’s just now moving to business automation, but again, not in any prescribed way yet.

So we don’t want to adopt the “right” technologies now. And that becomes a real concern for your ability to compete over time because you can outdate yourself really, really quickly if you don’t make the right choices.

Gardner: When you face such change in your architecture and potential decentralization of micro data centers, you still need to focus on security, backup and recovery, and contingency plans for emergencies. We still need to be mission-critical, even though we are distributed. And, as you point out, many of these systems are going to be self-healing and self-configuring, which requires a different set of skills.

We have a people, process, and technology sea change coming. You at Vertiv wanted to find out what people in the field are thinking and how they are reacting to such change. Tell us about the Vertiv-Forbes survey, what you wanted to accomplish, and the top-line findings.

Survey says seek strategic change

Olsen: We wanted to gauge the thinking and gain a sense of what the C-suite, the data center engineers, and the data center community were thinking as we face this new world of edge computing, 5G, and Internet of things (IoT). The top findings show a need for fundamental strategic change. We face a new mixture of architectures that is far more decentralized and with much more modularity, and that will mean a new way to manage and operate these data centers, too.

Based on the survey, only 11 percent of C-suite executives believe their data centers are updated enough to be ahead of current needs. They certainly don’t have the infrastructure ready for what’s needed in the future. It’s much less so with the data center engineers we polled, with only 1 percent of them believing they are ready. That means the vast majority, 99 percent, don’t believe they have the right infrastructure.

There is also broad agreement that security and bandwidth need to be updated. Concern about security is a big thing. We know from experience that security concerns have stunted remote monitoring adoption. But the sheer quantity of disparate sites required for edge computing makes it a necessity to access, assess, and potentially reconfigure and remotely fix problems through remote monitoring and access.

Vertiv is driving a high level of configurability of instruments so you can take our components and products and put them together in a multitude of different ways to provide the utmost flexibility when you deploy. We are driving modularized solutions in terms of both modular data center and modularity in terms of how it all goes together onsite. And we are adding much more intelligence into our offerings for the remote sites, as well as the connectivity to be able to access, assess, and optimize these systems remotely.

Gardner: Martin, did the survey indicate whether the IT leaders in the field are anticipating or demanding such self-configuration technologies?

Olsen: Some 24 percent of the executives reported that they expect more than 50 percent of data centers will be self-configuring or have zero-touch provisioning by 2025. And about one-third of them say that more than 50 percent of their data centers will be self-healing by then, too.

That’s not to say that they have all of the answers. That’s their prediction and their responses to what’s going to be needed to solve their needs. So, 29 percent of engineers say they don’t know what percentage of the data centers will be self-configuring and self-healing, but there is an overwhelming agreement that it is a capability they need to be thinking about. Vertiv will develop and engineer our offerings going forward based on what’s going to be put in place out there.

Gardner: So there may be more potential points of failure, but there is going to be a whole new set of technologies designed to ameliorate problems, automate, and allow the remote capability to fix things as needed. Tell us about the proper balance between automation and remote servicing. How might they work together?

Make intelligent choices before you act

Olsen: First of all, it’s not just a physical infrastructure problem. It has everything to do with the data and workloads as well. They go hand-in-hand; it certainly requires a partnership, a team of people and organizations that come together and help.

Driving intelligence into our products and taking that data off of our systems as they operate provides actionable data. You can then offer that analysis up to non-technical people on how to rectify situations and to make changes.

These solutions also need to communicate with the hypervisor platforms — whether that’s via traditional virtualization or containerization. Fundamentally, you need to be able to decide how and when to move your applications and workloads to the optimal points on the network.

We are trying to alleviate that challenge by making our offerings more intelligent and offering up actionable alarms, warnings, and recommendations to weigh choices across an overall platform. Again, it takes a partnership with the other vendors and services companies. It’s not just from a physical infrastructure standpoint.

Gardner: And when that ecosystem comes together, you can provide a constellation of data centers working in harmony to deliver services from the edge to the consumer and back to the data centers. And when you can do that around and around, like a circuit, great things can happen.

So let’s ground this, if we can, to the business reality. We are going to enable entirely new business models, with entirely new capabilities. Are there examples of how this might work across different verticals? Can you illustrate — when you have constructed decentralized data centers properly — the business payoffs?

Improving remote results

Olsen: As you point out, it’s all about the business outcomes we can deliver in the field. Take healthcare. There is a shortage of healthcare expertise in rural areas. Being able to offer specialized doctors and advanced healthcare in places that you wouldn’t imagine today requires a new level of compute and network that delivers low latency all the way to the endpoints.

Imagine a truck fitted with a medical imaging suite. That’s going to have to operate somewhat autonomously. The 5G connectivity becomes essential as you process those images. They have to be graphically loaded into a central repository to be accessed by specialists around the world who read the images.

That requires two-way connectivity. A huge amount of data from these images needs to move to provide that higher level of healthcare and a better patient experience in places where we couldn’t do it before.

So 5G plays into that, but it also means being able to process and analyze some of the data locally. There need to be aggregation points throughout the network. You will need compute to reside at multiple levels of the infrastructure. Places like the base of a cell tower could become a focal point for this.

You can imagine having four, five, six times as much compute power sitting in these places along a remote highway that is not easily accessible. So, having technical staff be able to troubleshoot those becomes vital.

There are also use cases that will use augmented reality (AR). Think of technicians in the field being able to use AR when they dispatch a field engineer to troubleshoot a system somewhere. We can make them as effective as possible, and access expertise from around the world to help troubleshoot these sites. AR becomes a massive part of this because you can overlay what the onsite people are seeing through 3D glasses or virtual reality glasses and help them through troubleshooting, fixing, and optimizing whatever system they might be working on.

Again, that requires compute right at the endpoint device. It requires aggregation points and connectivity all the way back to the cloud. So, it requires a complex network working together. The more advanced these use cases become, the more remote locations we have to think through, and we are going to have to deploy infrastructure there and access it as well.

Gardner: Martin, when I listen to you describe these different types of data centers with increased complexity and capabilities in the networks, it sounds expensive. But are there efficiencies you gain when you have a comprehensive design across all of the parts of the ecosystem? Are there mitigating factors that help with the total cost?

Olsen: Yes, as the net footprint of compute increases, I don’t think the cost is linear with that. We have proven that with the Vertiv technologies we have developed and already deployed. As the compute footprint increases, there is a fundamental need for driving energy efficiency into the infrastructure. That comes in the form of using more efficient ways of cooling the IT infrastructure, and we have several options around that.

It’s also from new battery technologies. You start thinking about lithium-ion batteries, which Vertiv has solutions around. Lithium-ion batteries make the solution far more resilient and more compact, and it needs much less maintenance when it sits out there.

So, the amount of infrastructure that’s going to go out there will certainly increase. We don’t think the cost is necessarily going to be linear when you pay close attention to how, as an organization, you deploy edge computing. Considering these new technologies is going to help drive energy efficiency, for example.

Gardner: Were there any insights from the Forbes survey that went to the cost equation? How do the IT executives expect this to shake out?

Energy efficiency partnerships

Olsen: We found that 71 percent of the C-suite executives said that future data centers will reduce costs. That speaks to the fact that there will be more infrastructure out there, but that it will be more energy efficient in how it’s run.

It’s also going to reduce the cost of the overall business. Going back to the original discussion around the business outcomes, deploying infrastructure in all these different places will help drive down the overall cost of doing business.

It’s an energy efficiency play both from a very fundamental standpoint in the way you simply power and cool the equipment, and overall, as a business, in the way you deliver improved customer experience and how you deliver products and services for your customers.

Gardner: How do organizations prepare themselves to get out in front of this? As we indicated from the survey findings, not that many say they are prepared. What should they be doing now to change that?

Olsen: Yes, most organizations are unprepared for the future, and they are not necessarily even in agreement on the challenges. A very small percentage of the respondents, 11 percent of executives, believe that their data centers are ahead of current needs, and even fewer of the data center engineers do. Only 44 percent of them say that their data centers are updated regularly. Only 29 percent say their data centers even meet current needs.

To prepare going forward, they should seek partnerships. Get the data centers upgraded, but also think through and understand how organizations like Vertiv have decades of experience in designing, deploying, and operating large data centers from a physical infrastructure standpoint. We use that experience and knowledge base for the data center of tomorrow. It can be a single IT rack or two going to any location.

We take all of that learning and experience and drive it into what becomes the smallest common denominator data center, which could just be a rack. So it’s about working with someone who has that experience, already has the data, and offers configurable, modular solutions that are intelligent and can be accessed, assessed, and optimized remotely. And it’s about managing the data that comes off these systems and extracting the value out of it, the way we do with some of our offerings around Vertiv LIFE Services, with very prescriptive, actionable alarms and alerts that we send from our systems.

Very few organizations can do this on their own. It’s about the ecosystem, working with companies like Vertiv, working closely with our strategic partners on the IT side, storage networks, and all the way through to the applications that make it all work in unison.

Think through how to efficiently add compute capacity across all of these new locations, what those new locations should look like, and what the requirements are from a security standpoint.

There is a resiliency aspect to it as well. In harsh environments such as high-tech manufacturing, you need to ensure the infrastructure is scalable and minimizes capital expenditure. The modular approach allows building for a future that may be somewhat unknown at this point. Deploying modular systems that you can easily augment and add capacity or redundancy to over time, and that operate via robust remote management platforms, is one of the things you want to be thinking about.

Gardner: This is one of the very few empirical edge computing research assets that I have come across, the Vertiv and Forbes collaboration survey. Where can people find out more information about it if they want more details? How is this going to be available?

Olsen: We want to make this available to everybody to review. In the interest of sharing the knowledge about this new frontier, the new world of edge computing, we will absolutely be making this research and study available. I want to encourage people to go visit vertiv.com to find more information and download the research results.

Sponsor: Vertiv.